Manos Theodosis

PhD candidate, Computer Science

CRISP Lab, Harvard University

etheodosis@g.harvard.edu

Curriculum Vitae (Updated Sep 2024)

I am a sixth-year (and final-year) PhD student in Computer Science at Harvard University, advised by Demba Ba. My research interests revolve around deep learning, mathematics, and optimization. I'm particularly interested in representations, geometry and topology, and dynamics in deep learning. During the summer of 2021, I interned at Amazon Web Services, where I had the pleasure of being hosted by Karim Helwani. I completed my undergraduate studies at the National Technical University of Athens in Greece, where I wrote my thesis on tropical geometry under the supervision of Petros Maragos.

Mentoring: As a first-generation student, I'm passionate about providing help and guidance to people who lack access to it. This led me to create MentoRes, a mentoring initiative co-founded with Konstantinos Kallas, which aims to help people applying to PhD programs in Computer Science or engineering fields. I'm always happy to chat and give advice, even if it's unrelated to PhD applications.

Personal: I was born in Crete and spent the first couple of years of my life there. I then moved to Athens, where I spent most of my life before coming to the US for my PhD. I enjoy quotes a lot and keep lists of my current favorites. My favorite book is "The Cider House Rules" by John Irving. I'm a movie buff with a soft spot for tasteful thrillers and horror movies, and I enjoy skill-based video games. I play board games weekly, mainly social deception and min-max Euro games. I love national parks, but unfortunately my quest to visit all of them has fallen behind because of COVID.

Projects

Equivariance

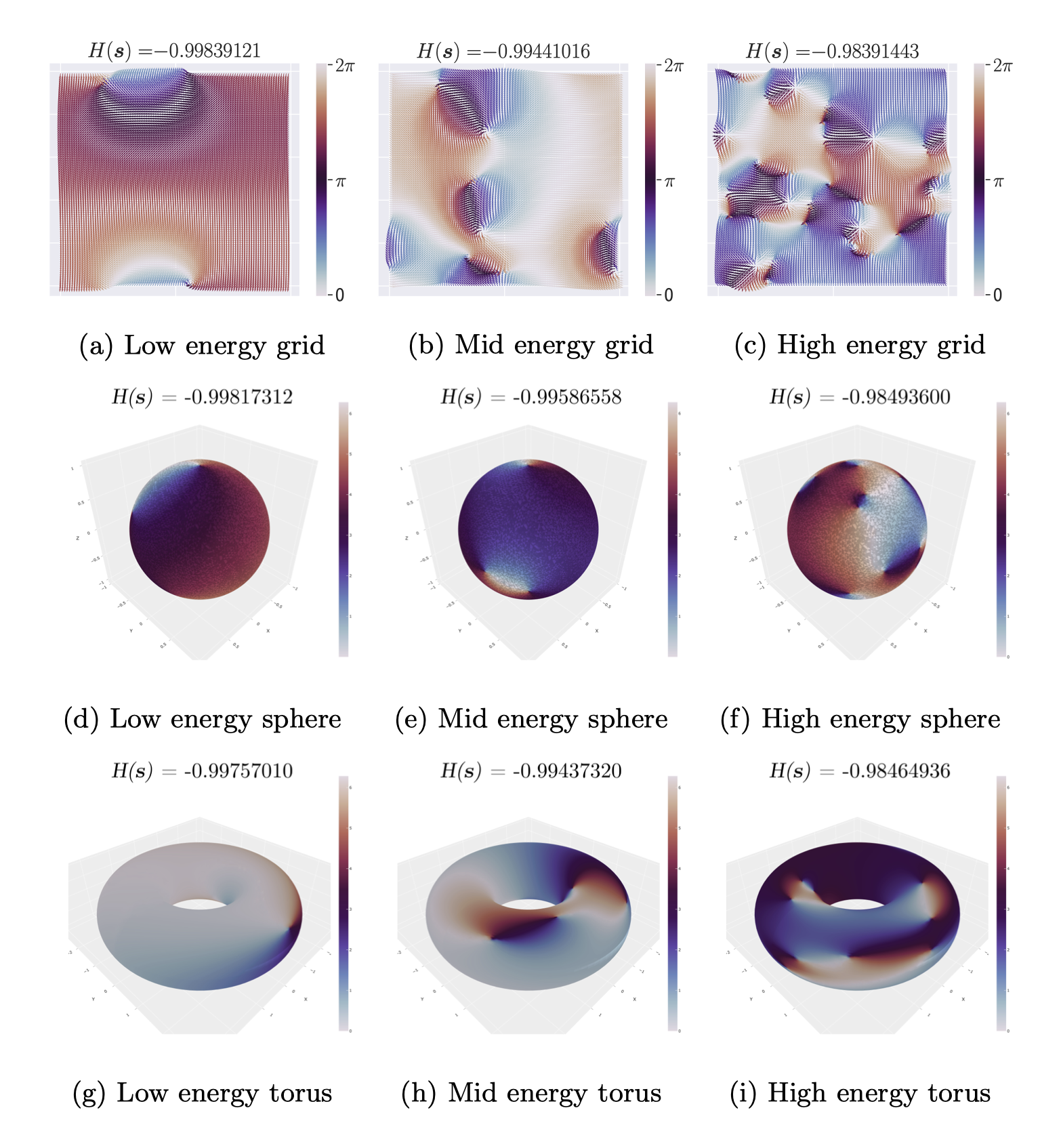

Many problems have physical constraints, such as invariance to rotations or to the choice of reference frame. Incorporating these constraints, or inductive biases, into neural architectures is nontrivial and requires careful architectural design; in return, the resulting networks have significantly smaller model sizes and improved performance.

Relevant papers:

1. Theodosis, Ba, Dehmamy. "Constructing gauge-invariant neural networks for scientific applications", in GRaM and AI4Science Workshops at ICML, 2024.

2. Theodosis, Helwani, Ba. "Learning group representations in neural networks", in arXiv, 2024.

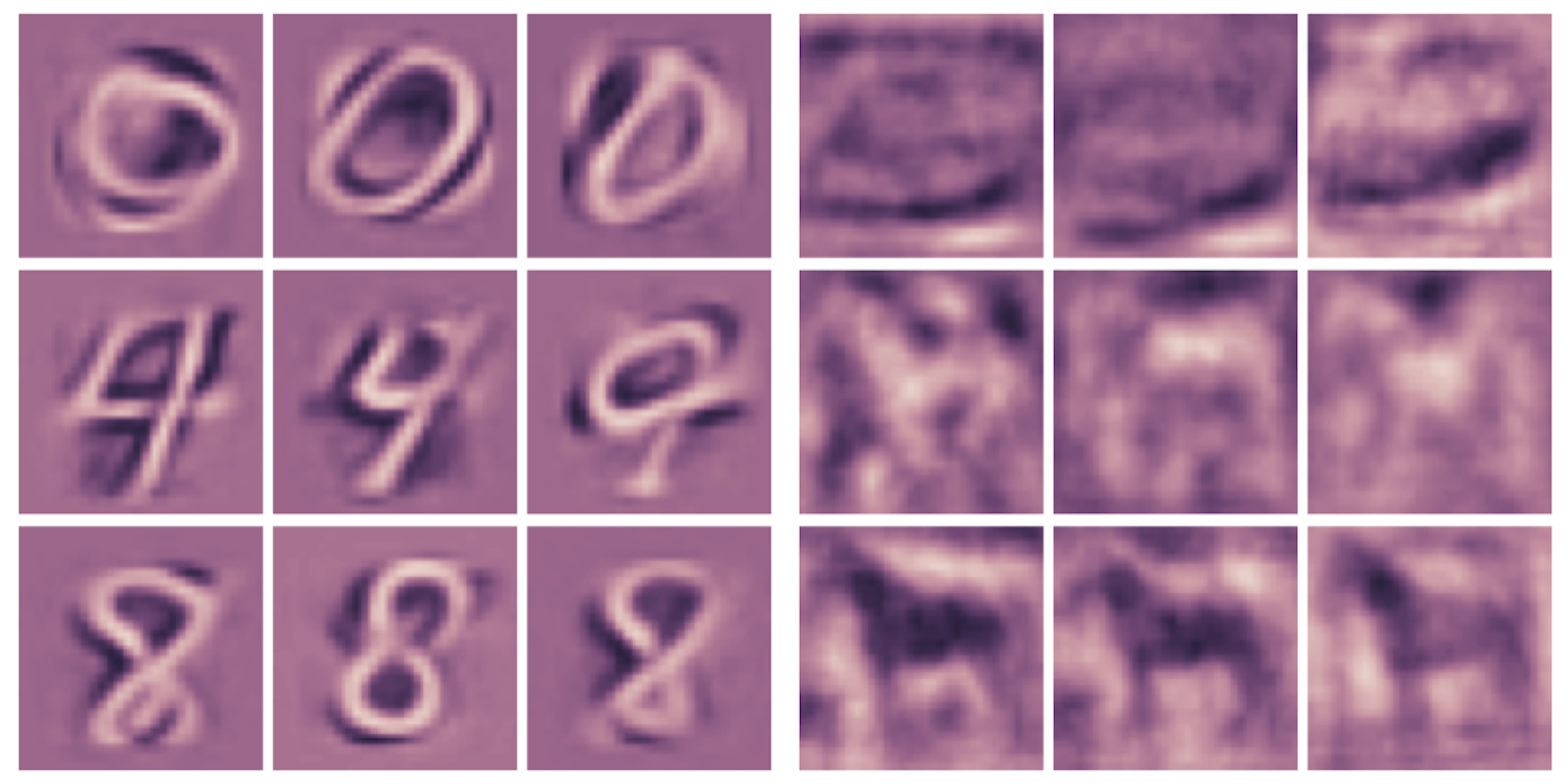

Structured representations

Learning meaningful representations is instrumental to most learning problems. Representations with appropriate properties can reduce model sizes, improve performance, and be more interpretable by being better suited to the task at hand.

Relevant papers:

1. Tasissa, Theodosis, Tolooshams, Ba. "Discriminative reconstruction via simultaneous dense and sparse coding", in TMLR, 2024.

2. Theodosis, Tolooshams, Tankala, Tasissa, Ba. "On the convergence of group-sparse autoencoders", in arXiv, 2021.

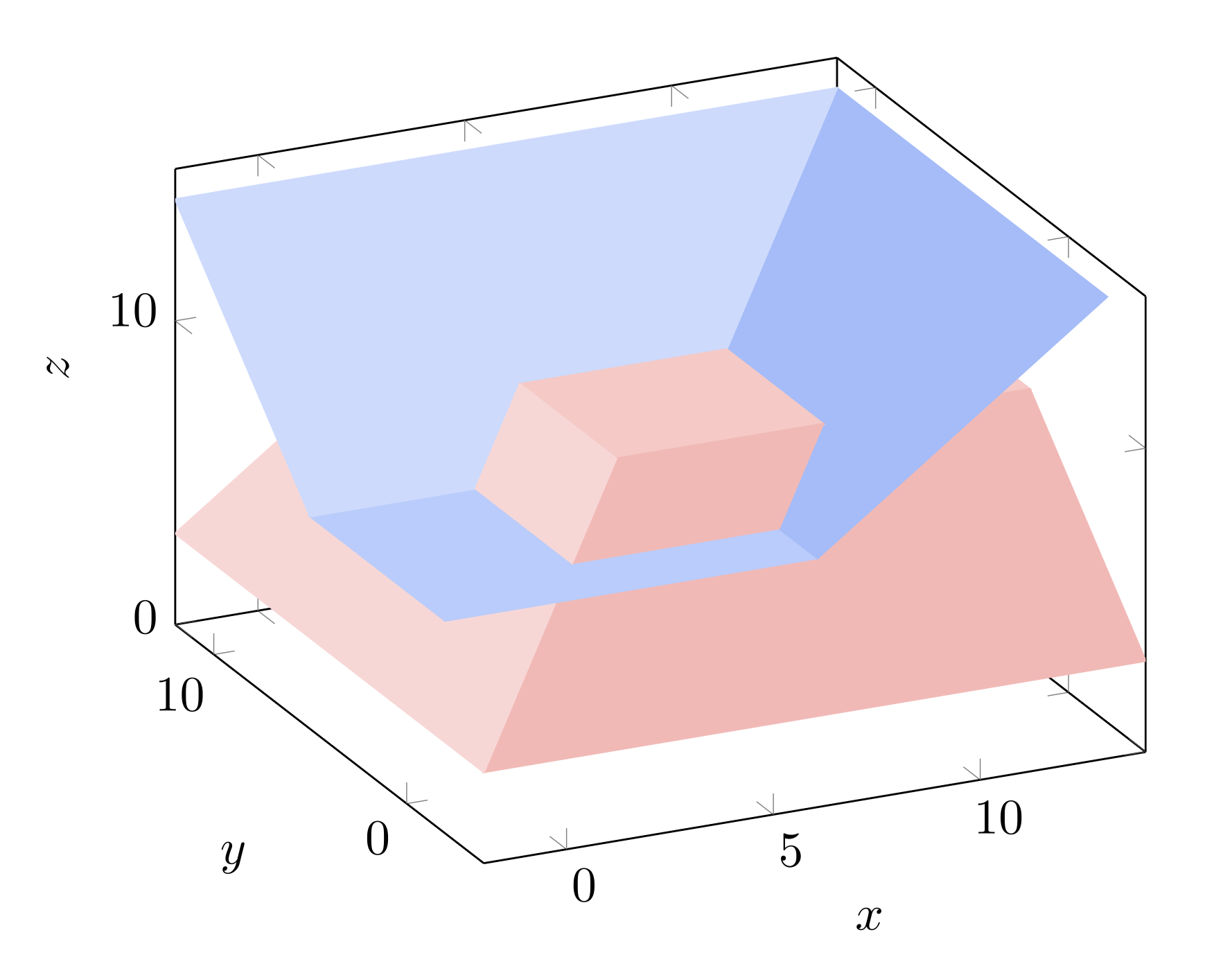

Tropical geometry

Linear algebra powers most learning systems today; however, there are nonlinear algebras that are more expressive and better suited to certain problems, such as studying the expressivity of neural networks. Through polyhedral geometry, these algebras enable concise descriptions of many problems in science and engineering.

Relevant papers:

1. Maragos, Charisopoulos, Theodosis. "Tropical Geometry and Machine Learning", in Proceedings of the IEEE, 2021.

2. Maragos, Theodosis. "Multivariate tropical regression and piecewise-linear surface fitting", in International Conference on Acoustics, Speech, and Signal Processing, 2020.

3. Theodosis, Maragos. "Tropical modeling of weighted transducers algorithms on graphs", in International Conference on Acoustics, Speech, and Signal Processing, 2019.

Select publications

- Theodosis E., Ba D., Dehmamy N. "Constructing gauge-invariant neural networks for scientific applications", in GRaM and AI4Science Workshops at ICML, 2024.

- Tasissa A., Theodosis E., Tolooshams B., Ba D. "Discriminative reconstruction via simultaneous dense and sparse coding", in TMLR, 2024.

- Theodosis E., Helwani K., and Ba D. "Learning group representations in neural networks", in arXiv, 2024.

- Theodosis E. and Ba D. "Learning silhouettes with group sparse autoencoders", in International Conference on Acoustics, Speech, and Signal Processing, 2023.

- Maragos P., Charisopoulos V., Theodosis E. "Tropical Geometry and Machine Learning", in Proceedings of the IEEE, vol. 109, no. 5, pp. 728-755, 2021.

- Maragos P., Theodosis E. "Multivariate tropical regression and piecewise-linear surface fitting", in International Conference on Acoustics, Speech, and Signal Processing, 2020.

- Theodosis E., Tolooshams B., Tankala P., Tasissa A., Ba D. "On the convergence of group-sparse autoencoders", in arXiv, 2021.

- Theodosis E., Maragos P. "Tropical modeling of weighted transducers algorithms on graphs", in International Conference on Acoustics, Speech, and Signal Processing, 2019.

Talks

- Learning group representations in neural networks
  IAIFI Journal Club Talk, October 3, 2023

- Learning cyclic groups in neural networks
  Baylor/Rice/University of Houston Journal Club Talk, September 8, 2023

- Constraining neural networks for inverse problems
  DISC & TIAI Annual Symposium, April 20, 2023

- Constraining neural networks to craft representations
  IAIFI Lightning Talks, March 17, 2023